Restoring Sight to the Blind

info@sight2blind.org


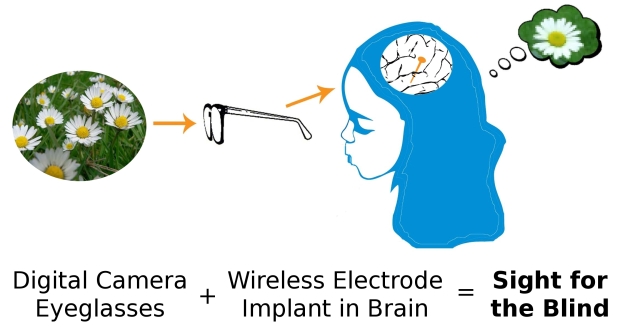

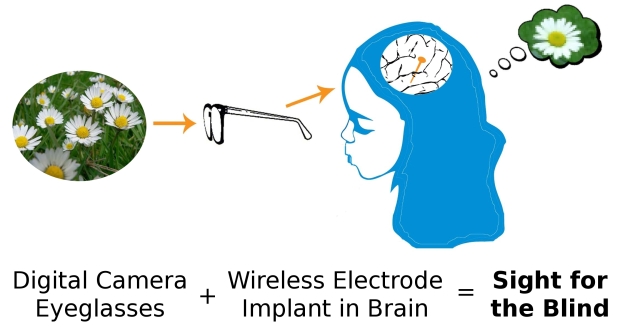

Welcome to the home page of the Thalamic Visual Prosthesis Project.

¤ Support our work. We rely on donations from individuals and foundations to support our research to restore sight to the blind. If you are interested in making a tax-deductible gift, visit https://giving.massgeneral.org/donate/pezaris-lab. Any amount helps. Please ask your employer's HR department whether they match charitable donations. And thank you.

¤ The fundamental idea we are pursuing is to restore sight to the blind. We hope to accomplish this by implanting multi-wire electrodes in the lateral geniculate nucleus (LGN), the part of the thalamus that relays signals from the retina in the eye to the primary visual cortex at the rear of the head. In leading causes of blindness, the eye ceases working as a light-sensitive organ, but the remainder of the visual system is largely intact. By sending signals from an external man-made sensor such as a digital camera into the brain through carefully implanted electrodes in the LGN, we hope to provide a crude approximation of normal vision.

It is important to understand that we do not anticipate restoring vision that is in any way close to normal. Our best guess is that a visual prosthesis will improve the patient's quality of life by making it easier to navigate through familiar and perhaps unfamiliar surroundings. We hope that it will allow the patient to distinguish and identify simple objects, and perhaps even help recognize people. But it is important to understand that these hopes are still some time away. There is a tremendous amount of work to be done before we have even the crudest initial experimental device temporarily implanted in a human.

¤ We have published a scientific paper describing our first high-profile results:

J. S. Pezaris and R. C. Reid, "Demonstration of artificial visual percepts generated through thalamic microstimulation," Proceedings of the National Academy of Sciences, 104(18):7670-7675, May 1, 2007 [PDF]

¤ Here are a few selected examples of the press coverage on the paper: The Economist

¤ The following illustration depicts a schematized version of an initial device and shows the basic elements of the design.

¤ The first of two small movies accompanying the article and press coverage helps explain what the animals are doing in this experiment.

¤ The second of the two movies helps us understand what prosthetic vision might look like to the patient. This could be called an artist's rendition of the experience. There are numerous assumptions that underlie the simulation, many of which are likely incorrect; this movie should serve only as a guide.

¤ We have published a second scientific paper showing progress on the design parameters of a device:

J. S. Pezaris and R. C. Reid, "Simulations of electrode placement for a thalamic visual prosthesis," IEEE Transactions on Biomedical Engineering, 56(1):172-178, 2009 [PDF]

¤ We have published a scientific paper discussing possible modes of bringing signals into the brain, specifically using a thalamic visual prosthesis as an example of the larger field of computer-to-brain interfaces:

J. S. Pezaris and E. E. Eskandar, "Getting signals into the brain: Visual prosthetics through thalamic microstimulation," Neurosurgical Focus, 27(1):E6, 2009 [PDF]

¤ Recently, there has been an effort to simulate how prosthetic vision will appear to the eventual recipient of an implant by using virtual reality technologies. We developed a simulation and used it to assess the visual acuity that would be available using a suite of different designs:

B. Bourkiza, M. Vurro, A. Jeffries, and J. S. Pezaris, "Visual Acuity of Simulated Thalamic Visual Prostheses in Normally Sighted Humans," PLOS ONE, 10.1371/journal.pone.0073592

¤ Continuing in that line of research, we adapted the simulation so that it could be used to test reading ability. Reading is one of the standard activities of daily living, and lends itself to easy measurement and analysis, as is detailed in our most recent publication:

M. Vurro, A. M. Crowell, and J. S. Pezaris, "Simulation of thalamic prosthetic vision: reading accuracy, speed, and acuity in sighted humans," Frontiers in Human Neuroscience, 10.3389/fnhum.2014.00816

Here is a movie from that study that shows one of our subjects reading the sentence "Ten different kinds / of flowers grow by / the side of the road" out loud using a simulated prosthesis. The subject's eye position is shown by the red circle, but was not visible to them during the experiment. The pattern darts about the screen as the subject looks from word to word. It might seem amazing that they were able to read at all; we have found a large gap between what someone watching one of these experiments understands and what the subject performing the experiment experiences. The simulated phosphene vision here has many more phosphenes (four thousand) than would be available from the proposed device, but we also tested lower-resolution versions. Watch it a few times, and you'll start to see the words better, but keep in mind the person in this example had never used the simulation before.

¤ In preparation for implanting a first-generation prototype in an animal model, we have been training monkeys to perform the same letter recognition task that we used with humans in the Bourkiza et al. work above. While monkeys do not understand images of letters the way that humans do, they are very capable of distinguishing arbitrary visual shapes. We use letters as a convenient set of arbitrary shapes because they allow us to directly compare animal results with human results. During the training, we had the animals perform exactly the same task as before, in a simulation of artificial vision. Because monkeys learn this task much more slowly than humans, the training gave us an opportunity to study the learning of the task in very fine detail.

Killian NJ, Vurro M, Keith SB, Kyada M, Pezaris JS, "Perceptual learning in a non-human primate model of artificial vision," Scientific Reports, 10.1038/srep36329

¤ To answer questions about how to design post-implant training for future human recipients of a device, we had six normal, sighted subjects come to the laboratory every day for 40 sessions and read using the task from Vurro et al., above. With 15 or so minutes every day, our subjects were able to improve their performance by an amount equivalent to doubling the number of phosphenes. While we expected there to be an improvement with training, we were somewhat surprised at the amount of improvement we observed. We also saw dynamics that were highly similar (although substantially sped up) to the monkey learning dynamics from Killian et al., above.

Rassia KEK, Pezaris JS, "Improvement in reading performance through training with simulated thalamic prostheses," Scientific Reports, 10.1038/s41598-018-31435-0

¤ In preparation for an upcoming experiment on whether visual prostheses need to be sensitive to eye position, we performed a literature review on the subject. The review strongly suggests that the answer is yes: visual prostheses do need to be sensitive to eye position.

Paraskevoudi N, Pezaris JS, "Eye Movement Compensation and Spatial Updating in Visual Prosthetics: Mechanisms, Limitations and Future Directions," Frontiers in Systems Neuroscience, https://doi.org/10.3389/fnsys.2018.00073

¤ Results from that experiment show that our hypothesis was correct: not compensating for eye position is highly detrimental to a subject's ability to read through a simulated visual prosthesis.

Paraskevoudi N, Pezaris JS, "Full gaze contingency provides better reading performance than head steering alone in a simulation of prosthetic vision," Scientific Reports, https://doi.org/10.1038/s41598-021-86996-4

¤ We have reviewed the literature surrounding a technical issue with regard to how stimulation is applied to electrodes in a visual prosthesis. Specifically, the question is whether applying stimulation across electrodes at exactly the same time (synchronously) or in waves (sequentially) makes a difference in perception. In the review, we present four outstanding questions and propose an experiment to address the most pressing one.

Moleirinho S, Whalen AJ, Fried SI, Pezaris JS, "The impact of synchronous versus asynchronous electrical stimulation in artificial vision," Journal of Neural Engineering, https://iopscience.iop.org/article/10.1088/1741-2552/abecf1

¤ Finally, there is a TEDx talk that gives an overview of our research.